China’s Tech Giants Race to Replace Nvidia’s AI Chips

For over a decade, Nvidia’s GPUs have been the core of China’s AI ecosystem. These chips power the AI behind everything from search engines and video apps to smartphones and electric vehicles, as well as the latest generative AI models. Even as Washington tightened export controls on advanced AI chips, Chinese companies kept buying “China-only” versions of Nvidia’s GPUs, such as the H800, A800, and H20, which were pared down to comply with those rules.

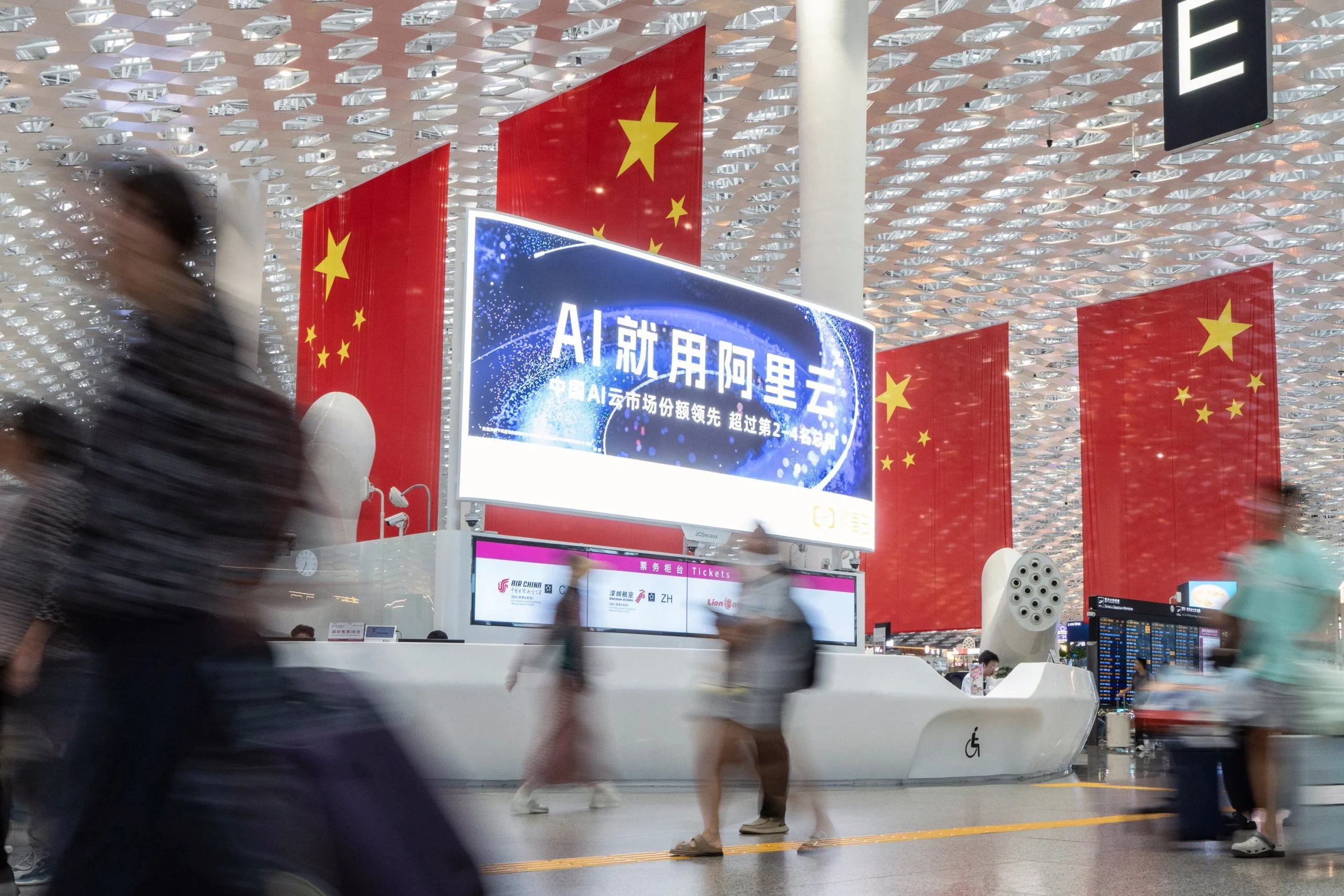

However, by 2025, Beijing’s patience appeared to have run out. Chinese state media began labeling Nvidia’s China-compliant H20 chip as unsafe and possibly compromised by hidden “backdoors.” Regulators summoned Nvidia executives for questioning. The Financial Times reported that major tech firms such as Alibaba and ByteDance had been quietly instructed to cancel new Nvidia GPU orders. In August, the Chinese AI startup DeepSeek indicated that its next AI model would be designed to run on China’s “next-generation” domestic AI chips.

The message was unmistakable: China could no longer rely on a U.S. supplier for its AI future. If Nvidia could not or would not sell its best hardware in China, domestic alternatives had to fill the gap. Chinese suppliers would need specialized chips for both AI training (building models) and AI inference (running them). That is a tall order. Nvidia’s chips set the global standard for AI computing, and matching them takes not only raw silicon performance but also advanced memory, high interconnect bandwidth, a mature software ecosystem, and large-scale production capacity.

Several contenders have emerged as China’s best hope: Huawei, Alibaba, Baidu, and Cambricon. Each company represents a different approach to reinventing China’s AI hardware stack.

Huawei Leads China’s Tech Giants in AI Chip Development

Huawei, one of China’s largest tech companies, appears to be the natural successor if Nvidia is excluded. Its Ascend line of AI chips has matured despite U.S. sanctions. In September 2025, Huawei revealed a multi-year roadmap for its Ascend chips.

The Ascend 950, expected in 2026, targets 1 petaflop of performance in FP8, an 8-bit floating-point format widely used for AI workloads. It will feature 128 to 144 gigabytes of high-bandwidth memory and interconnect bandwidth of up to 2 terabytes per second. The Ascend 960, due in 2027, is projected to double the 950’s capabilities. Further ahead, the Ascend 970 promises significant further gains in compute power and memory bandwidth.

Currently, Huawei offers the Ascend 910B, introduced after U.S. sanctions cut off its access to global suppliers. This chip is roughly comparable to Nvidia’s A100 from 2020 and became the default option for companies unable to obtain Nvidia GPUs. In 2024, a Huawei official claimed the 910B outperformed the A100 by about 20 percent in some training tasks. However, compared with Nvidia’s H20, it still uses an older generation of high-bandwidth memory (HBM2E), holds about one-third less data in memory, and transfers data between chips roughly 40 percent more slowly.

Huawei’s latest chip is the 910C, a dual-chiplet design that combines two 910B dies. On paper, this approaches the performance of Nvidia’s H100, which was Nvidia’s flagship until 2024. Huawei demonstrated a 384-chip Atlas 900 A3 SuperPoD cluster delivering roughly 300 petaflops of compute, implying that each 910C delivers just under 800 teraflops in FP16. That is still below the H100’s roughly 2,000 teraflops, although that H100 figure assumes structured sparsity; its dense FP16 rating is about 1,000 teraflops. Even so, the 910C is sufficient to train large models when deployed in large numbers, and Huawei has detailed how it used Ascend chips to train models similar to DeepSeek’s.
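The per-chip estimate is simply the cluster total divided by the chip count. The sketch below reproduces it, assuming the roughly 300 petaflops is the plain sum of 384 identical per-chip peak ratings. For comparison it uses Nvidia’s published H100 SXM FP16 Tensor Core ratings: about 989 teraflops dense and 1,979 teraflops with 2:4 structured sparsity, the latter being the likely source of the “roughly 2,000” figure.

```python
# Back-of-envelope check of the per-chip figure implied by a cluster rating.
# Assumption: the cluster's ~300 PFLOPS is the plain sum of identical
# per-chip peak ratings (no allowance for communication overhead).

CLUSTER_PFLOPS = 300       # Atlas 900 A3 SuperPoD, approximate FP16 total
CHIPS = 384                # Ascend 910C chips in that cluster

H100_DENSE_TFLOPS = 989    # H100 SXM, FP16 Tensor Core, dense
H100_SPARSE_TFLOPS = 1979  # same, with 2:4 structured sparsity


def per_chip_tflops(cluster_pflops: float, chips: int) -> float:
    """Convert an aggregate petaflop rating to teraflops per chip."""
    return cluster_pflops * 1_000 / chips


ascend_910c = per_chip_tflops(CLUSTER_PFLOPS, CHIPS)

print(f"Ascend 910C: ~{ascend_910c:.0f} TFLOPS FP16 per chip")
print(f"Share of H100 dense peak:  {ascend_910c / H100_DENSE_TFLOPS:.0%}")
print(f"Share of H100 sparse peak: {ascend_910c / H100_SPARSE_TFLOPS:.0%}")
```

Against the dense rating the 910C lands near 80 percent of an H100; against the sparsity rating it is closer to 40 percent. Such comparisons are only meaningful when both sides use the same convention.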

To offset its single-chip performance gap, Huawei is focusing on rack-scale supercomputing clusters that pool thousands of chips into one system. Building on the Atlas 900 A3 SuperPoD, Huawei plans to launch the Atlas 950 SuperPoD in 2026, linking 8,192 Ascend chips to deliver 8 exaflops of FP8 performance. This cluster will have 1,152 terabytes of memory and 16.3 petabytes per second of interconnect bandwidth, and will occupy more floor space than two basketball courts. A successor, the Atlas 960 SuperPoD, will scale up to 15,488 Ascend chips.
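The Atlas 950 SuperPoD totals can be checked against the Ascend 950’s per-chip targets (about 1 FP8 petaflop and 128 to 144 gigabytes of memory) by dividing through by 8,192. A sketch, assuming the totals are plain sums of per-chip figures. Whether the 1,152-terabyte figure uses decimal (1,000-based) or binary (1,024-based) units is not stated, so both are computed:

```python
# Divide Atlas 950 SuperPoD totals by its chip count and compare the
# result with the per-chip Ascend 950 targets.
# Assumption: totals are plain sums of identical per-chip figures.

CHIPS = 8_192                 # Ascend chips in one Atlas 950 SuperPoD
CLUSTER_FP8_EXAFLOPS = 8      # quoted aggregate FP8 performance
CLUSTER_MEMORY_TB = 1_152     # quoted aggregate memory

# Exaflops -> petaflops is a factor of 1,000.
fp8_pflops_per_chip = CLUSTER_FP8_EXAFLOPS * 1_000 / CHIPS

# The unit convention for "terabytes" is unknown, so try both.
mem_gb_decimal = CLUSTER_MEMORY_TB * 1_000 / CHIPS  # if 1 TB = 1,000 GB
mem_gb_binary = CLUSTER_MEMORY_TB * 1_024 / CHIPS   # if 1 TB = 1,024 GB

# Going the other way: 8,192 chips at exactly 1 PFLOPS each, in exaflops.
fp8_exaflops_if_1pf_each = CHIPS * 1.0 / 1_000

print(f"FP8 per chip:           {fp8_pflops_per_chip:.3f} PFLOPS")
print(f"Memory per chip (dec):  {mem_gb_decimal:.1f} GB")
print(f"Memory per chip (bin):  {mem_gb_binary:.1f} GB")
print(f"Total if 1 PFLOPS each: {fp8_exaflops_if_1pf_each:.3f} EFLOPS")
```

Both results fit the roadmap. 8,192 one-petaflop chips give 8.192 exaflops, which rounds to the quoted 8. The memory total works out to exactly 144 GB per chip in binary units, or about 141 GB in decimal units, inside the 128-to-144 GB range either way.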

Huawei is not only developing hardware. Its MindSpore deep learning framework and CANN software stack aim to lock customers into its ecosystem, providing domestic alternatives to PyTorch (originally developed by Meta) and Nvidia’s CUDA platform, respectively. State-backed firms and U.S.-sanctioned companies such as iFlytek, 360, and SenseTime are already Huawei customers. Chinese tech giants ByteDance and Baidu have also ordered small batches of Huawei chips for testing.

Despite these advances, Huawei faces challenges. Chinese telecom operators such as China Mobile and China Unicom, which build many of China’s data centers, remain wary of Huawei’s influence and often prefer to mix GPUs and AI chips from several suppliers rather than commit fully to Huawei. Large internet platforms also worry that partnering too closely with Huawei could give it leverage over their intellectual property. Still, Huawei is better positioned than ever to challenge Nvidia.

Alibaba, Baidu, and Cambricon Join China’s Tech Giants in AI Chip Development

Alibaba’s chip unit, T-Head, founded in 2018, initially focused on open-source RISC-V processors and data center servers. Today, it is one of China’s most aggressive challengers to Nvidia. T-Head’s first AI chip, the Hanguang 800, announced in 2019, is an efficient inference chip that can process 78,000 images per second and accelerate recommendation algorithms and large language models. Built on a 12-nanometer process with about 17 billion transistors, it performs up to 820 trillion operations per second and accesses memory at around 512 gigabytes per second.

Alibaba’s latest design, the PPU, is positioned as a direct rival to Nvidia’s H20. It features 96 gigabytes of high-bandwidth memory and supports PCIe 5.0 connections. In a state-backed television program showcasing a China Unicom data center, the PPU was presented as a match for the H20; reports indicate that this data center runs more than 16,000 PPUs out of 22,000 chips in total. Alibaba has also been using its AI chips to train large language models. In addition, Alibaba Cloud recently upgraded its Panjiu supernode server, which now packs 128 AI chips per rack, uses a modular design for easy upgrades, and is fully liquid-cooled. For Alibaba, developing its own chips is as much about protecting its cloud business, by securing reliable access to training-grade AI chips, as it is about national policy.

Baidu’s AI chip work began well before the current AI boom. Starting in 2011, Baidu experimented with field-programmable gate arrays (FPGAs) to accelerate deep learning for search and advertising. That effort evolved into the Kunlun chip line. The first generation, Kunlun 1, launched in 2018, was built on Samsung’s 14-nanometer process and delivered around 260 trillion operations per second with a peak memory bandwidth of 512 gigabytes per second. Kunlun 2 followed in 2021 with modest upgrades: fabricated on a 7-nanometer node, it delivered 256 trillion operations per second for low-precision calculations and 128 teraflops at FP16, while consuming less power. Baidu later spun off the Kunlun business as an independent company, Kunlunxin, valued at $2 billion.

In 2025, Baidu revealed a 30,000-chip cluster built on its third-generation P800 processors. Each P800 reportedly delivers roughly 345 teraflops at FP16, comparable to Huawei’s 910B and Nvidia’s A100, with interconnect bandwidth close to that of Nvidia’s H20. Baidu claims the system can train “DeepSeek-like” models with hundreds of billions of parameters, and its latest multimodal models, the Qianfan-VL family, were trained on Kunlun P800 chips. Kunlun chips have also won orders worth more than 1 billion yuan for China Mobile’s AI projects, news that has helped lift Baidu’s stock 64 percent this year. Baidu plans to release a new AI chip every year for the next five years, starting with the M100 in 2026 for large-scale inference, followed by the M300 in 2027 for training and inference of massive multimodal models.
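Multiplying the chip count by the reported per-chip rating gives the P800 cluster’s theoretical peak. The sketch assumes all 30,000 chips run at their quoted FP16 peak. The utilization value is a hypothetical placeholder, included only to show that sustained training throughput is some fraction of peak; it is not a figure Baidu has reported.

```python
# Theoretical peak of Baidu's 30,000-chip Kunlun P800 cluster, plus a
# sustained-throughput estimate under a HYPOTHETICAL utilization factor.

CHIPS = 30_000
P800_FP16_TFLOPS = 345            # reported per-chip FP16 peak

# Hypothetical: fraction of peak a real training job sustains.
# Not a Baidu figure; chosen only for illustration.
ASSUMED_UTILIZATION = 0.4


def aggregate_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Sum per-chip peak teraflops and convert to exaflops (1 EFLOPS = 1e6 TFLOPS)."""
    return chips * tflops_per_chip / 1_000_000


peak = aggregate_exaflops(CHIPS, P800_FP16_TFLOPS)
sustained = peak * ASSUMED_UTILIZATION

print(f"Theoretical FP16 peak: {peak:.2f} EFLOPS")
print(f"At {ASSUMED_UTILIZATION:.0%} utilization (assumed): {sustained:.2f} EFLOPS")
```

The peak of about 10 exaflops is more than 30 times the roughly 300 petaflops quoted for Huawei’s 384-chip Atlas 900 A3 SuperPoD, though peak ratings alone say little about how efficiently either system trains real models.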

Cambricon, another key player, struggled in the early 2020s, when its MLU 290 chip could not compete with Nvidia’s offerings. Founded in 2016 as a spinoff from the Chinese Academy of Sciences, Cambricon traces its roots to 2008 research on brain-inspired processors. It initially focused on neural processing units for mobile devices and servers, but lost Huawei as a flagship partner when Huawei developed its own chips. The company then expanded into edge and cloud accelerators with backing from Alibaba, Lenovo, iFlytek, and state-linked funds, and went public on Shanghai’s STAR Market in 2020 with a $2.5 billion valuation.

The following years were difficult, with falling revenues and investor pullback. By late 2024, however, Cambricon had returned to profitability, largely thanks to its MLU series. The MLU 290 was built on a 7-nanometer process with 46 billion transistors and designed for hybrid training and inference with scalable interconnect technology. The MLU 370 raised FP16 performance to 96 teraflops. The MLU 590, released in 2023, delivered a peak of 345 teraflops at FP16 and introduced support for FP8 data formats, improving efficiency. This chip helped turn Cambricon’s finances around. The MLU 690, currently in development, is expected to approach or rival Nvidia’s H100 in some metrics, with denser compute cores, higher memory bandwidth, and enhanced FP8 support. If it succeeds, Cambricon could become a genuine global competitor rather than just a domestic alternative.

Cambricon still faces challenges, including smaller production scale compared to Huawei and Alibaba and buyer caution due to past instability. Nevertheless, its recent comeback symbolizes that China’s domestic chip development can produce profitable, high-performance products.

The Geopolitical Stakes Behind China’s Tech Giants’ AI Chip Race

At its core, the struggle over Nvidia’s role in China is not just about teraflops or bandwidth. It is about control. Washington views chip restrictions as a way to protect national security and slow China’s AI progress. Beijing sees rejecting Nvidia as a way to reduce strategic vulnerability, even if it means temporarily accepting less powerful hardware.

China’s major players (Huawei, Alibaba, Baidu, and Cambricon), along with smaller companies such as Biren, Muxi (MetaX), and Suiyuan (Enflame), do not yet offer true substitutes for Nvidia’s best chips. Most of their products are comparable to Nvidia’s A100, which was the top chip in 2020, and they are still working to catch up with the H100, released in 2022.

Each company bundles its chips with proprietary software and platforms. Developers accustomed to Nvidia’s CUDA must therefore spend more time adapting their AI models to each platform, which can slow both training and inference work. DeepSeek’s next AI model, for example, has reportedly been delayed by efforts to run more of its training and inference on Huawei’s chips.

The question is not whether Chinese companies can build AI chips; they clearly can. The real question is whether, and when, they can match Nvidia’s combination of performance, software support, and user trust. On that front, the outcome remains uncertain.

One thing is clear: China no longer wants to play second fiddle in the world’s most critical technology race. The country’s tech giants are racing to replace Nvidia’s AI chips, determined to build a self-reliant future in artificial intelligence hardware.

